Amazon: How the E-commerce King Fell Behind in the AI Revolution

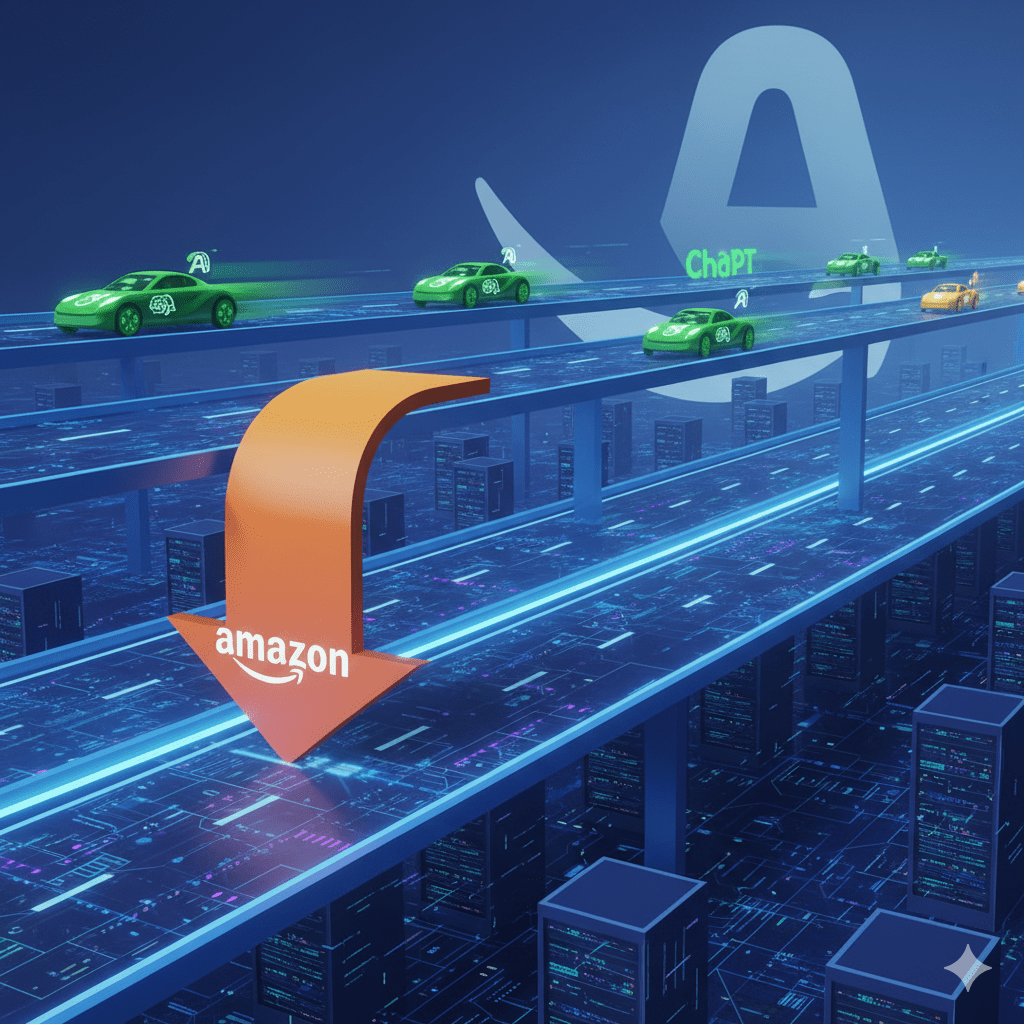

Amazon built the infrastructure that supports the AI revolution, but lost the opportunity to lead innovation in applications. The company that invented Alexa and dominates cloud computing was surprised by ChatGPT, revealing a fascinating paradox: owning the highways doesn’t guarantee controlling the traffic.

In one of the greatest ironies of recent technological history, Amazon, the company whose cloud hosts a large share of the industry’s AI workloads, found itself a spectator when generative models transformed the industry.

The Infrastructure Leader Paradox

The Invisible Empire of AWS

Amazon Web Services is not just a cloud computing provider; it is the backbone of the AI revolution:

- 32% share of the global cloud computing market

- OpenAI infrastructure: Reportedly an early AWS customer, although GPT-3 and GPT-4 were trained on Microsoft Azure

- AI startups: More than 70% use AWS to train models

- $90 billion in annual cloud services revenue

The Strategic Blindness Moment

Despite this privileged position, Amazon did not anticipate the revolution brewing on its own servers:

- 2022: ChatGPT launches using competitor infrastructure (Microsoft Azure)

- 2023: Amazon scrambles to create a coherent response

- Q1 2023: Andy Jassy publicly admits Amazon “didn’t see” generative AI coming

- Late reaction: Amazon took 6 months after ChatGPT’s launch to present a clear strategy

The Origins: Amazon as AI Pioneer

Alexa: The First Mainstream Assistant (2014)

Amazon was not new to AI. In fact, it was a pioneer in conversational AI:

- 2014: Launch of Echo and Alexa

- Mass adoption: 100 million Alexa devices by 2019

- Complete ecosystem: Skills, smart home, voice commerce

- Massive investment: Billions in research and development

Machine Learning on AWS (2017-2020)

Amazon also led ML democratization:

- SageMaker (2017): ML platform for developers

- Rekognition: Computer vision as a service

- Comprehend: Natural language processing

- Textract: Text extraction from documents

The Uncomfortable Question

If Amazon has had Alexa since 2014 and led ML in the cloud, why didn’t it create ChatGPT?

The “Lost Moment” Analysis

1. Focus on Existing Revenue Streams

Amazon concentrated on monetizing Alexa through:

- Voice commerce: Purchases through voice commands

- Smart home ecosystem: Connected device sales

- Skills marketplace: Third-party application revenue

- Advertising: Sponsored placements in Alexa responses

2. Limited Technological Architecture

Alexa was designed for:

- Specific commands: “Play music”, “Tell me the weather”

- Structured responses: Brief factual information

- Service integration: Device control and purchases

- NOT open conversation: Complex and creative dialogues were out of scope

3. Internal Innovator’s Dilemma

- Cannibalization fear: Advanced conversational AI could reduce Echo sales

- Resource allocation: Priority on AWS growth over speculative research

- Cultural inertia: Success with the current model created resistance to change

4. Market Underestimation

Amazon misinterpreted the signals:

- GPT-3 (2020): Seen as academic experiment, not commercial product

- Developer audience: Amazon saw a tool for developers and didn’t recognize its mainstream potential

- Indirect competition: Didn’t see OpenAI as threat to Alexa

The Response: Bedrock and Recovery Strategy

Amazon Bedrock (2023): The AI Platform

Amazon’s response came with Bedrock, but with a different approach:

- Multi-model platform: Access to Anthropic, Stability AI, AI21 Labs

- No in-house model initially: Aggregator rather than innovator

- Enterprise focus: B2B instead of B2C

- Infrastructure play: Leverage existing AWS ecosystem

Titan: Own Models (Late)

- Titan Text: Basic language model

- Titan Embeddings: For search and recommendations

- Titan Image: Image generation and editing

- Positioning: “Practical” models for enterprises

Anthropic Partnership

Amazon invested $4 billion in Anthropic:

- Preferential access: Claude available on Bedrock

- Training infrastructure: Anthropic uses Amazon chips

- Strategic hedge: If you can’t innovate, invest in those who do

The New Alexa: Trying to Reinvent Itself

The Renovation Project (2024-2025)

Amazon announced a new version of Alexa powered by generative AI:

- Natural conversations: Beyond specific commands

- Advanced personalization: Learning family context

- Bedrock integration: Leveraging external models

- Premium subscription: Additional monetization model

Transition Challenges

- Installed base: 500+ million devices with specific expectations

- Operating costs: Generative AI responses cost roughly 10x more to serve than traditional commands

- Established competition: ChatGPT and Google Assistant already dominate natural conversation

- User experience: Changing established user behaviors

Competitive Analysis: Amazon vs The Field

Recoverable Strengths

- Unmatched infrastructure: AWS remains the leader

- Established ecosystem: Hundreds of millions of Alexa devices

- Enterprise relationships: Existing B2B connections

- Financial resources: Ability to invest massively

Structural Disadvantages

- Brand perception: No longer seen as AI innovator

- Talent drain: Top researchers go to OpenAI, Anthropic

- Legacy constraints: Existing Alexa limits radical innovation

- Late mover: Competitors have a 2+ year advantage

The “Platform Play” Strategy

Amazon has pivoted to being the platform where others innovate:

- Bedrock: Host models from others rather than compete directly

- SageMaker: Enable companies to build their own AI

- Chips: Trainium and Inferentia for cost-effective AI training and inference, alongside general-purpose Graviton CPUs

- Ecosystem: Let partners innovate while Amazon provides infrastructure

The Cost of Delay

Financial Impact

- Lost opportunity: Generative AI market projected to reach $280B by 2030

- Catch-up investment: $17B spent in 2023-2024 on AI initiatives

- AWS growth slowdown: Competition from Microsoft Azure for AI workloads

- Alexa losses: Reports of $10B in annual losses in the devices division

Strategic Impact

- Narrative control: No longer dictates AI future

- Talent competition: Difficulty attracting top AI researchers

- Partnership dynamics: A position of dependency rather than leadership

- Innovation pipeline: Playing catch-up rather than setting agenda

Lessons from the Amazon Case

1. Innovation Is Not Linear

Having the base technology (ML, cloud, voice) doesn’t guarantee creating the breakthrough application.

2. Timing Is Critical

In tech markets, being 6 months late can mean years of recovery.

3. Culture Beats Strategy

Amazon’s “customer obsession” culture didn’t translate to anticipating this specific need.

4. Disruption Comes from Unexpected Places

OpenAI, not Google or Microsoft, defined the new era of conversational AI.

5. Platform Strategies Require Different Metrics

Amazon measured Alexa success in sales and device adoption, not conversational capability.

The Future: Can Amazon Regain Leadership?

Optimistic Scenario: “The Infrastructure Advantage”

- Bedrock becomes the standard: Multi-model platform preference

- Cost advantages: Cheaper to train on AWS than Azure/GCP

- Enterprise adoption: B2B focus proves more sustainable than B2C

- Alexa renaissance: New version captures public imagination

Pessimistic Scenario: “Permanent Follower Status”

- Commoditized infrastructure: AI models become less cloud-dependent

- Brain drain continues: Top talent prefers innovation over infrastructure

- Consumer mindshare lost: Alexa becomes legacy tech

- Enterprise disruption: New players bypass traditional enterprise sales

Most Likely Scenario: “Profitable Second Tier”

Amazon will probably:

- Maintain strong infrastructure position: AWS remains crucial

- Be a follower in innovation: Others define the future, Amazon enables it

- Find profitable niches: Enterprise AI, specific verticals

- Lose consumer narrative: No longer “the future of AI”

Final Reflections

Amazon’s case illustrates a fundamental paradox of technological innovation: past success can be the biggest obstacle to future success. The company that revolutionized e-commerce and democratized cloud computing became a victim of its own success.

The Persistent Questions

- Was it inevitable? Could Amazon have anticipated ChatGPT?

- Is it recoverable? Can a company regain AI leadership after losing it?

- Does it really matter? Is it better to be the enabler than the innovator?

The Broader Lesson

Amazon reminds us that in technology, there are no permanent positions. Even the most solid giants can be surprised by paradigm shifts. The key is not to avoid being surprised, which is impossible, but to maintain the agility to respond quickly when it happens.

Amazon’s AI story is still being written. But one thing is clear: the company that once defined the future of e-commerce must now settle for being an important, but not dominant, player in the future of artificial intelligence.

The Amazon case demonstrates that in the AI era, building the highways doesn’t guarantee controlling the traffic. Sometimes, the real innovators are those who use your own infrastructure to disrupt you.